A robust and efficient test suite is the backbone of a healthy open-source project. It gives developers the confidence to add new features and refactor code without causing regressions. Recently, we’ve merged a significant 19-patch series that begins a much-needed cleanup of our Python test infrastructure, paving the way for faster, more reliable, and more parallelizable tests.

The Old Way: A Monolithic Setup

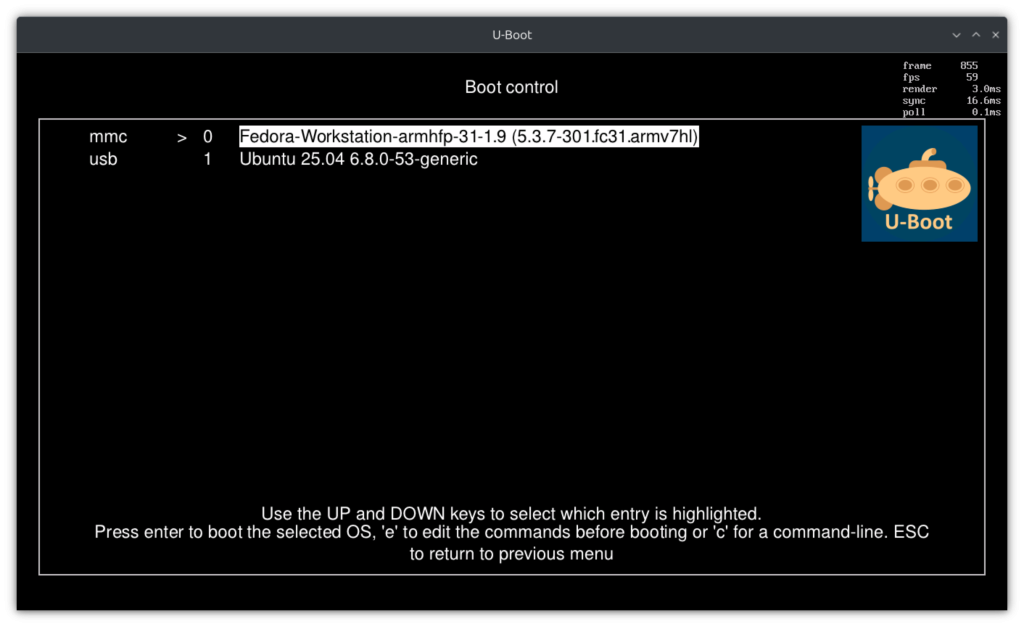

For a long time, many of our tests, particularly those for the bootstd (standard boot) commands, have relied on a collection of disk images. These images simulate various boot scenarios, from Fedora and Ubuntu systems to ChromiumOS and Android partitions.

Our previous approach was to have a single, monolithic test called test_ut_dm_init_bootstd() that would run before all other unit tests. Its only job was to create every single disk image that any of the subsequent tests might need.

While this worked, it had several drawbacks:

- Inefficiency: Every test run created all the images, even if you only wanted to run a single test that didn’t need any of them.

- Hidden Dependencies: The relationship between a test and the image it required was not explicit. If an image failed to generate, a seemingly unrelated test would fail later, making debugging confusing.

- No Parallelism: This setup made it impossible to run tests in parallel (make pcheck). Many tests implicitly depended on files created by other tests, a major barrier to parallel execution.

- CI Gaps: Commands like make qcheck (quick check) and make pcheck were not being tested in our CI, which meant they could break and remain broken for long periods.

A New Direction: Embracing Test Fixtures

The long-term goal is to move away from this monolithic setup and towards using proper test fixtures. In frameworks like pytest (which we use), a fixture is a function that provides a well-defined baseline for tests. For us, this means a fixture would create a specific disk image and provide it directly to the tests that need it, and only those tests.
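As a rough illustration of the idea (not code from the series), a pytest fixture that builds a disk image might look like the sketch below. The helper make_blank_image(), the fixture name blank_disk_image and the 1 MiB size are hypothetical; tmp_path is pytest's built-in per-test temporary directory. Only a test that names the fixture as a parameter causes the image to be created.

```python
import pathlib

import pytest

IMAGE_SIZE = 1024 * 1024  # hypothetical image size: 1 MiB


def make_blank_image(path, size=IMAGE_SIZE):
    """Hypothetical helper: create a zero-filled disk image of `size` bytes."""
    path = pathlib.Path(path)
    with open(path, "wb") as f:
        f.truncate(size)  # extend the empty file with zero bytes
    return path


@pytest.fixture
def blank_disk_image(tmp_path):
    """Function-scoped fixture: a fresh image for each test that requests it."""
    return make_blank_image(tmp_path / "disk.img")


def test_uses_image(blank_disk_image):
    # pytest matches the parameter name and runs the fixture first
    assert blank_disk_image.stat().st_size == IMAGE_SIZE


def test_needs_no_image():
    # No fixture parameter, so no image is built for this test
    assert True
```

Keeping the image logic in a plain helper and the fixture as a thin wrapper means the helper can be reused later by a fixture with a different scope.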

This 19-patch series is the first major step in that direction.

The Cleanup Process: A Three-Step Approach

The series can be broken down into three main phases of work.

1. Stabilization and Bug Squashing

Before making big changes, we had to fix the basics. The first few patches were dedicated to getting make qcheck to pass reliably. This involved:

- Disabling Link-Time Optimization (LTO), which was interfering with our event tracing tools (Patch 1/19).

- Fixing a memory leak in the VBE test code (Patch 2/19).

- Standardizing how we compile device trees in tests to fix path-related issues (Patch 3/19).

2. Decoupling Dependent Tests

A key requirement for parallel testing is that each test must be self-contained. A clear example of a hidden dependency was test_fdt_add_pubkey(), which relied on cryptographic keys created by an entirely different test, test_vboot_base().

To fix this, we first moved the key-generation code into a shared helper function (Patch 6/19). Then, we updated test_fdt_add_pubkey() to call this helper itself, ensuring it creates all the files it needs to run (Patch 7/19). This makes the test independent and ready for parallel execution.
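The pattern can be sketched roughly as follows. This is an illustration under assumptions, not the actual code from Patches 6 and 7: the helper name create_test_keys(), the key names and the file layout are hypothetical, and random bytes stand in for real key material, which would normally come from an external tool such as openssl. What matters is that the test calls the shared helper itself instead of relying on another test having run first.

```python
import os
import tempfile


def create_test_keys(keydir, names=("dev", "prod")):
    """Hypothetical shared helper: ensure every key file a test needs exists.

    Random bytes are a placeholder for real keys so this sketch runs without
    external tools. Existing files are left untouched, so calling the helper
    more than once is harmless.
    """
    paths = {}
    for name in names:
        path = os.path.join(keydir, f"{name}.key")
        if not os.path.exists(path):
            with open(path, "wb") as f:
                f.write(os.urandom(32))  # placeholder, NOT a usable key
        paths[name] = path
    return paths


def fdt_add_pubkey_check(keydir):
    """Stand-in for test_fdt_add_pubkey(): it creates its own inputs first."""
    keys = create_test_keys(keydir)
    # ... the real test would now exercise fdt_add_pubkey with these keys ...
    return all(os.path.exists(p) for p in keys.values())


if __name__ == "__main__":
    # Works in an empty directory: no other test has to run beforehand
    with tempfile.TemporaryDirectory() as tmp:
        print(fdt_add_pubkey_check(tmp))
```

Because the helper is idempotent, two tests that both need the keys can call it without coordinating with each other.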

3. Preparing for Fixtures by Refactoring

The bulk of the work in this series was a large-scale refactoring of all our image-creation functions. Previously, functions like setup_fedora_image() took a ubman object as an argument. This ubman is a function-scoped fixture, meaning it’s set up and torn down for every single test. This is not suitable for creating images, which we’d prefer to do only once per test session.

The solution was to change the signature of all these setup functions. Instead of:

def setup_fedora_image(ubman):

they now accept only the specific dependencies they actually need:

def setup_fedora_image(config, log, ...):

This was done for every image type: Fedora, Ubuntu, Android, ChromiumOS, EFI, and more. This change decouples the image creation logic from the lifecycle of an individual test run, making it possible for us to move this code into a session-scoped fixture in the future.
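To make the new style concrete, here is a minimal sketch of a setup function that depends only on config and log. It is not the real setup_fedora_image(): the name setup_example_image() is hypothetical, the body only writes a blank placeholder file, the config object is assumed to expose a persistent_data_dir attribute, and log is taken to be a standard logging.Logger for illustration (the log object used by U-Boot's test framework has its own API).

```python
import logging
import os
import tempfile
from types import SimpleNamespace


def setup_example_image(config, log, size=1024 * 1024):
    """Hypothetical setup function in the new style.

    Needs only `config` (assumed to have persistent_data_dir) and `log`
    (here a standard logging.Logger), not a per-test ubman object.
    """
    fname = os.path.join(config.persistent_data_dir, "example.img")
    with open(fname, "wb") as f:
        f.truncate(size)  # blank placeholder instead of a real OS image
    log.info("created %s (%d bytes)", fname, size)
    return fname


if __name__ == "__main__":
    # No ubman anywhere: a simple namespace stands in for the config object
    logging.basicConfig(level=logging.INFO)
    with tempfile.TemporaryDirectory() as tmp:
        cfg = SimpleNamespace(persistent_data_dir=tmp)
        setup_example_image(cfg, logging.getLogger("images"))
```

Since nothing here is tied to a single test's lifecycle, the same function can be called from a fixture of any scope, or from a standalone script.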

What’s Next?

This series has laid the groundwork. The immediate bugs are fixed, tests are more independent, and the code is structured correctly. The next step will be to complete the transition by creating a session-scoped pytest fixture that handles all this image setup work once at the start of a test run.
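A minimal sketch of what such a fixture could look like, assuming setup functions in the new config/log style. The fixture name disk_images, the build_image() helper and its placeholder body are hypothetical; scope="session" and the built-in tmp_path_factory fixture are standard pytest features. pytest runs a session-scoped fixture once, the first time a test requests it, and hands the cached result to every later test in the same session.

```python
import logging
import os
from types import SimpleNamespace

import pytest

image_log = logging.getLogger("image-setup")


def build_image(config, log, name, size=1024 * 1024):
    """Hypothetical config/log-style setup function (placeholder body)."""
    fname = os.path.join(config.persistent_data_dir, name)
    with open(fname, "wb") as f:
        f.truncate(size)  # stand-in for real partitioning and file copying
    log.info("built %s", fname)
    return fname


@pytest.fixture(scope="session")
def disk_images(tmp_path_factory):
    """Built once per session; every test that requests it shares the result."""
    config = SimpleNamespace(
        persistent_data_dir=str(tmp_path_factory.mktemp("images")))
    return {
        name: build_image(config, image_log, f"{name}.img")
        for name in ("fedora", "ubuntu")  # hypothetical image set
    }


def test_fedora_boot(disk_images):
    assert os.path.exists(disk_images["fedora"])


def test_ubuntu_boot(disk_images):
    # Reuses the images built for the first requesting test; nothing is rebuilt
    assert os.path.exists(disk_images["ubuntu"])
```

One design point worth keeping in mind: if tests are run in parallel with pytest-xdist, each worker process has its own session, so a session-scoped fixture runs once per worker rather than once overall.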

This investment in our test infrastructure will pay dividends in the form of faster CI runs, a more pleasant developer experience, and a more stable and reliable U-Boot. Happy testing! 🌱